February 12, 2024, by Laura Nicholson

Strategies to Identify Misinformation: Videos

Of the three online information sources discussed over the past few weeks, video tends to be the most difficult to authenticate. As video creation tools become more sophisticated, fake videos are becoming easier to make and harder for us to detect.

The usual approach of bringing a critical mindset to online content still applies, and there are a few tried-and-tested strategies you can use too.

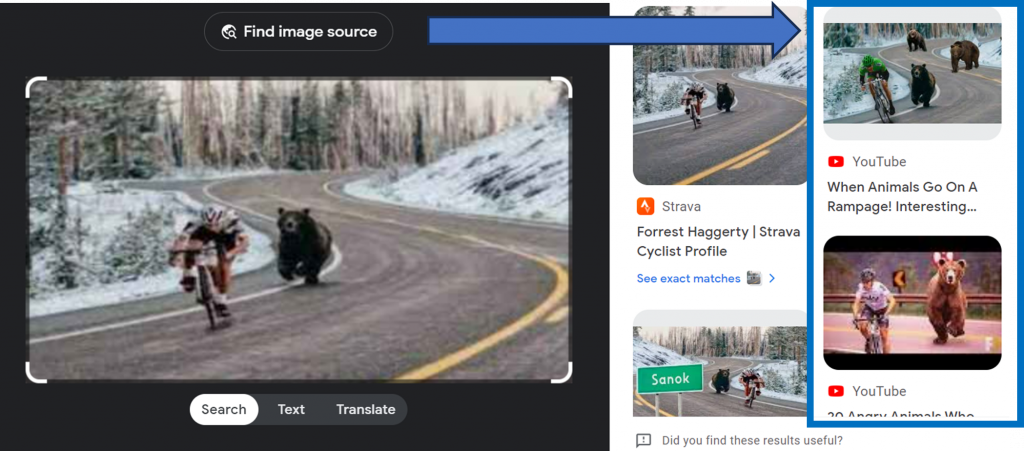

Reverse image search

Take a screenshot of the frame of the video you are suspicious of and do a reverse image search. On trialling this strategy, I uploaded what I believed to be a fake image, but I soon discovered from the list of hits that it was actually a frame taken from a video.

Controversial content

Be on your guard if the content causes controversy or seems to evoke negative feelings. Often, content can be deliberately designed to create anger in order to manipulate points of view, so be wary as it could be fake.

Author

With video hosting platforms, it isn’t always easy to identify an author’s credentials, as they will often go by a pseudonym or nickname. However, take a look at their followers; do they give any indications about the type of content this person usually posts?

Additionally, if someone only has a few followers but consistently posts a high volume of content, you should also be wary. This could suggest they copy a lot of content from others and use it without checking its credibility.

Comments

Check the comments and number of likes on the video. People are usually quick to call something out as fake, so comments flagging it as such can be a good indication that the video is promoting misinformation.

Purpose

Be aware of the underlying reason for the video. Sometimes creators hook us in with some very convincing arguments before presenting us with a call to action, which is often to make a purchase from them or from someone they are being paid to endorse.

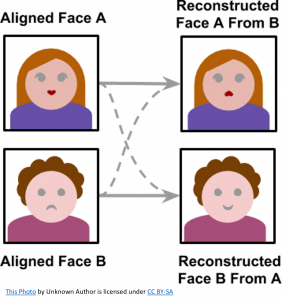

Photoplethysmography (PPG)

I appreciate this technique isn’t freely available, but I thought it would be interesting to reveal what some of the more expensive high-tech systems can do to detect computer-generated content, in particular face swapping.

Deepfake videos that use artificial intelligence to misrepresent people and events are becoming harder to detect, even for experts (Köbis et al., 2021). Interesting research has taken place, which reveals the lengths researchers are going to in order to uncover the truth. For example, Intel’s “FakeCatcher” system uses a technique called photoplethysmography (PPG), which basically detects changes in blood flow in a person’s face (Ciftci et al., 2020). Intel’s detection also looked for authentic eye movement, as deepfake videos often fail to reproduce natural eye movement and have the eyes going in all directions rather than in a straight line. The system did struggle when presented with more pixelated videos, but with a 96% accuracy rate (BBC News, 2023), that’s still impressive.
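To give a rough feel for the blood-flow idea, here is a minimal Python sketch. It is not Intel’s FakeCatcher method, only a toy illustration under stated assumptions: it assumes we already have the average green-channel brightness of the face region for each frame, and it simply looks for the strongest rhythm within a plausible heart-rate range. The function name `estimate_pulse_bpm`, the `green_means` input and the 0.7 to 4 Hz band are my own illustrative choices, not taken from the research cited above.

```python
import math

def estimate_pulse_bpm(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Estimate a pulse rate (beats per minute) from per-frame average
    green-channel values of a face region. Hypothetical helper for illustration."""
    n = len(green_means)
    if n < 2:
        return 0.0
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]  # remove constant brightness
    best_freq, best_power = 0.0, 0.0
    # Naive discrete Fourier transform, restricted to plausible heart rates.
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(c * math.cos(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0  # 0.0 means no rhythm was found in the band

# Synthetic example (made-up data): 10 seconds of 30 fps "video" whose
# brightness flickers faintly at 1.2 Hz, i.e. 72 beats per minute.
fps = 30
frames = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
print(round(estimate_pulse_bpm(frames, fps)))  # prints 72
```

A real face should show a faint, steady rhythm like this; a sequence with no pulse-like rhythm returns 0.0 in this sketch. Real detection systems are far more involved than this, and a single number like this would not be enough to call a video fake.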

Interesting stuff! One can’t help feeling, though, that sooner or later deepfake video creation will master the art of including blood flow and eye tracking more effectively. I guess we’ll then just have to move on to the next strategy, which highlights the ongoing challenge of staying ahead of those who spread misinformation.

References

BBC News. (2023) Inside the system using blood flow to detect deepfake video – BBC News. Available at: https://www.youtube.com/watch?v=aPTnq_1hWDE (Accessed 30/01/2024)

Ciftci, U.A., Demir, I. and Yin, L. (2020) ‘FakeCatcher: Detection of Synthetic Portrait Videos using Biological Signals’. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2020 July 15. doi: 10.1109/TPAMI.2020.3009287

Köbis, N.C., Doležalová, B. and Soraperra, I. (2021) ‘Fooled twice: People cannot detect deepfakes but think they can’. iScience. 2021 Oct 29;24(11):103364. doi: 10.1016/j.isci.2021.103364
