January 31, 2019, by Stuart Moran

Mapping Ethical Challenges in the Internet of Things for Research

Dr Lachlan Urquhart (Lecturer in Technology Law, University of Edinburgh) and Dr Martin Flintham (Assistant Professor in Computer Science, University of Nottingham). Lachlan.urquhart@ed.ac.uk and martin.flintham@nottingham.ac.uk Jan 2019.

Towards the Internet of Things

The door refused to open.

It said, “Five cents, please.”

He searched his pockets. No more coins; nothing. “I’ll pay you tomorrow,” he told the door. Again he tried the knob. Again it remained locked tight. “What I pay you,” he informed it, “is in the nature of a gratuity; I don’t have to pay you.”

“I think otherwise,” the door said. “Look in the purchase contract you signed when you bought this conapt.”

Philip K Dick’s 1969 vision of the 1990s in Ubik might be said to capture elements of the modern world of smart devices, but also some of the concerns about how they are, perhaps unwittingly, integrated into our everyday lives. The Internet of Things (IoT) marks the next stage in the evolution of computing: past the personal computer and mobility eras, where one person might have one or a few computers, to the ubiquity era, where one person might have hundreds, thousands or eventually millions of computers at their fingertips. Ubiquitous computing is where computing is so interwoven into the fabric of everyday life that it is indistinguishable from it, or, as Mark Weiser puts it, where computing does not live on a personal device of a particular sort, but is in the woodwork everywhere. Since the 2000s, the majority of microprocessors have not been in what we would identify as computers, but in things: washing machines, TVs, car engine management systems, clothing tags. The Internet of Things takes ubiquitous computing and once again makes it visible through “smart” devices: everyday things augmented with computation and connectivity. They both sense the world and can be sensed in the world, enabling notions of presence and identity, and measuring location and features of the physical world. They attempt to infer their local context through ambient intelligence, predicting needs and reacting accordingly, or passively recording, tracking and monitoring.

The smart home marks a coordinated industry programme to bring IoT technologies, and the associated service platforms to which they connect, into the home. These devices span heating, security, entertainment, lighting and appliances. The most prominent, the voice-operated smart speaker, has been installed in over 100 million homes to date. In a different context, the IoT promises to enhance manufacturing by tracking products and materials through the supply chain, and through measurement and autonomy within the factory.

The deployment of Internet of Things technologies is increasing within the University environment too, as researchers, administrators and operational support services embrace new opportunities to understand and enhance the world around us through captured data and automation. In research, the IoT can enable new avenues for data collection “in the wild”, for example in the home or on the factory floor, or allow previously analogue activities to be performed digitally, or at scale. Taken at face value, at an institutional level the IoT promises much in terms of understanding the needs of academics and students for facilities and infrastructure, or managing access to resources.

Whilst the IoT holds great promise and great appeal to many, it is not without its risks. There are risks to privacy and security that need to be thought through and mitigated as early as possible, and that are increasingly required by law to be considered. The General Data Protection Regulation 2016 even legally mandates data protection by design and default, requiring data controllers to put in place organisational and technical measures to protect the information privacy rights of data subjects. Moreover, as with any new technology, care must be taken to ensure that it is indeed providing a suitable solution to a known problem, co-created with and by end users and other stakeholders from the outset. Our goal, then, within this Digital Research funded project is to explore some of the challenges around ideating and evaluating the IoT with stakeholders within the University, to unpack the risks and how they should be dealt with in practice.

Mapping Workshops

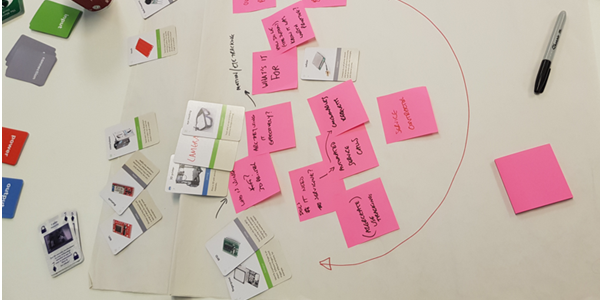

In autumn 2018, we ran workshops using a range of card-based tools, in particular helping participants to map the ethical challenges around use of IoT devices in the research/university context. This involved inviting a range of participants from different parts of the university working in operational IT through to research to use various card decks to both create new IoT systems that could be developed in the university and would be useful for their everyday practices, and to then evaluate the ethical risks they might create.

Figure 1: Unpacking IoT Use Cases

For the creation stage, we used more creative decks such as our older Privacy by Design cards[1], Dixit cards, Know Cards for IoT design and Ideation, and even Brian Eno’s Oblique Strategies. After this, we asked participants to evaluate their IoT systems using our Moral-IT and Legal-IT decks of cards[2], a design tool developed within Horizon to prompt critical questioning of the ethical risks and support engagement with topics of privacy, law, ethics and security. Importantly, we also used our streamlined ethical impact assessment process[3], which required them to consider the risks, their likelihood of occurring, possible safeguards, and the challenges of implementing these safeguards. This helps participants to systematically consider the different aspects of new technologies, and to structure their thoughts and ways forward.

We now provide an example of the types of themes that were discussed by one of the groups after designing their system and then evaluating it.

Proposed System: Lab management tracking, particularly of machine use.

This system was proposed to support lab managers and monitor the use of lab equipment, taking 3D printers as a relevant case study. Here managing equipment is important for a variety of reasons, from restricting access, for safety reasons, to those who are authorised and trained to use it, to capturing who has used it for billing and resource auditing. Such technologies can be seen in other “accessible” lab and maker spaces such as the Nottingham Hackspace[4]. In this group, IoT technologies such as biometrics and eye tracking were suggested as an opportunity to measure whether users were paying attention, or to make sure they were not doing something improper with the machines, and to use this as part of a future management system.

The group saw utility around training: either to facilitate training automatically before granting access to labs (for example, instead of training with a technician), or, once users have access, to see whether they are using the equipment properly, to decide whether further training is needed, and to establish for what percentage of users this is the case, in order to inform broader policy.

The Utility of Sensing

The group used the technology cards to enumerate how IoT could, at face value, aid with equipment management for access control, maintenance, auditing and resource allocation. Fingerprint readers or personal key cards could provide reliable and convenient access control mechanisms. Various sensing and monitoring elements, connected to the Internet, could help with managing maintenance, particularly breakdowns and the reordering of consumables such as replacement parts or filament, to make management more convenient. Monitoring elements could report how often a machine is used, by how many people, and how often servicing needs to be conducted, preventing people from using machines when they are close to needing a service, and reducing downtime.
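As a rough illustration of the monitoring idea above, the following Python sketch checks whether a machine is close to needing a service and whether a job should be allowed to start. It is only a sketch under stated assumptions: the `Printer` fields, the 10% warning margin and the function names are hypothetical and were not part of the system the group designed.

```python
from dataclasses import dataclass


@dataclass
class Printer:
    """Hypothetical usage record for one machine (all fields are assumptions)."""
    name: str
    hours_since_service: float
    service_interval_hours: float
    filament_grams_left: float


def needs_service_soon(printer: Printer, margin: float = 0.1) -> bool:
    """True once usage is within `margin` (a fraction) of the service interval."""
    threshold = printer.service_interval_hours * (1 - margin)
    return printer.hours_since_service >= threshold


def can_start_job(printer: Printer, job_hours: float, job_filament_grams: float) -> bool:
    """Refuse jobs that would overrun the service interval or run out of filament."""
    if printer.hours_since_service + job_hours > printer.service_interval_hours:
        return False
    return printer.filament_grams_left >= job_filament_grams


printer = Printer(
    name="printer-a",
    hours_since_service=95.0,
    service_interval_hours=100.0,
    filament_grams_left=200.0,
)
print(needs_service_soon(printer))       # close to the service interval
print(can_start_job(printer, 16.0, 50))  # a 16-hour job would overrun it
print(can_start_job(printer, 4.0, 50))   # a short job still fits
```

Note that this check needs no information about who is using the machine, so this part of the system could run without collecting any personal data.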

Integrated monitoring was felt to aid the management of what is being produced using the machines, particularly with 3D printing, which increasingly raises concerns of appropriateness, cost and liability. A printed part could breach someone else’s intellectual property rights, which in turn raises questions about whether it was printed for personal or University business, or the user could be a PhD student who lacks the budget to pay for the resources involved. Another discussion was about whether CAD files would need to be reviewed prior to being printed, whether this could be automated, and whether a given item is appropriate to print. For example, if the item is a set of components, what are they being printed for: a public performance, research for an academic paper, or a firearm or cell phone jammer? The group discussed who should know about this and have authority to decide what should be printed, and whether this needs to be budget holders, supervisors, university faculty or even a machine learning system that can decide where the value is in using the machine. This might be valuable in determining whether something really needs to be printed, or whether the job is going to run for 16 hours, cost several thousand pounds and be scrapped at the end.

Risks

This group was cognisant of the risk of making users uncomfortable or creating “scary systems”, particularly through location tracking of users, or by proposing fingerprints rather than cards to authenticate their identity. The group was aware of user rights under GDPR as to what is allowable, particularly in relation to the use of fingerprinting and biometrics in this type of system, which cause more concern, and around subsequent use and secure storage, where hacking of stored fingerprints is a concern. Whilst they recognised that cards could be used instead, they felt that people in Universities often forget or lose their cards (meaning policies around cards for access are not always enforced properly). So, there was a perceived benefit that fingerprints or biometrics can be enforced more effectively and provide a higher level of security.

There is a recognised challenge in providing meaningful transparency, and in how this relates to consenting to being tracked by the system: giving enough information about what is being collected, why, and so forth. The importance of choice in relation to consent was discussed. When using machines in a workplace setting, users may not be fully aware of everything that is being collected, nor of the implications of implicitly being assessed on how they use the machines, which could lead to judgements being made about how long it takes an employee to complete a task. So, in the process of using a 3D printer, the granularity of the information being collected and who it is shared with became concerns. Is it shared with finance and central university bodies to check the payment is authorised? If it is kept locally, how long are files stored for? How are different user groups treated similarly or differently (e.g. staff versus student rights on the printer)? These are all important decisions that should be built into the design from the outset. They sit alongside the more familiar implications of making sensors accessible despite their limitations (e.g. how eye tracking to determine attention might work differently given the different ways people view screens or their eyes move).

Safeguards

The group recognised the need for increased meaningful transparency through a statement that documents how data is handled, e.g. whether it is kept locally with no external access, whether it is encrypted, and so on. There was a sense that any consent form faces a tension: explaining everything that is needed makes it too long, but making it meaningful requires making it simpler and easier to digest. The group recognised the challenge of black boxes, and the issue of asking users simply to trust the black box, as opposed to actually showing what it is doing and how it is generating data, which might help make it more accountable.

Our group used the cards to describe the technical storage requirements for personal data coming from IoT-enabled devices, for example storing any information such as fingerprints with strong 256-bit encryption and only keeping hashes of these. Limiting access to printing logs, to address the risk of data breaches, was another approach. They also recognised the risks of keeping a database of fingerprint data that might have to be disclosed in response to access requests from police and other authorities, and the challenges of any communication between devices, e.g. between the 3D printer and other lab devices, where that information is being relayed via the cloud.

Our group described a desire to ensure that any use of data from a system is not punitive, and that data is instead aggregated, as the vested interest in that data will differ between the service provider and user perspectives. The former will be more concerned with running costs and the like, and the latter perhaps with whether they are using the equipment properly, for their own development. Anonymisation, pseudonymisation and data obfuscation were identified as being key safeguards, particularly in guarding against data being used punitively by a variety of stakeholders.
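To make the pseudonymisation and aggregation safeguards more concrete, here is a minimal Python sketch. It assumes a usage log of (user, machine, hours) entries, replaces each user ID with a keyed hash (HMAC-SHA-256), and only reports per-machine totals when enough distinct users contributed. The log format, the function names and the minimum group size of three are illustrative assumptions, not something the group specified.

```python
import hashlib
import hmac
from collections import defaultdict


def pseudonymise(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash. Without the key, the mapping
    cannot be rebuilt simply by hashing a list of known user IDs."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_usage(log, min_group_size=3):
    """Sum hours per machine, suppressing machines used by fewer than
    `min_group_size` distinct users so that totals do not single anyone out."""
    hours = defaultdict(float)
    users = defaultdict(set)
    for user, machine, used_hours in log:
        hours[machine] += used_hours
        users[machine].add(user)
    return {
        machine: {"total_hours": hours[machine], "distinct_users": len(users[machine])}
        for machine in hours
        if len(users[machine]) >= min_group_size
    }


# Hypothetical raw log entries: (user ID, machine, hours used).
raw_log = [
    ("alice", "printer-a", 1.5),
    ("bob", "printer-a", 2.0),
    ("carol", "printer-a", 0.5),
    ("alice", "printer-b", 3.0),
]
key = b"example-key-kept-by-the-lab-manager"  # assumption: stored separately from the log
pseudonymous_log = [(pseudonymise(u, key), m, h) for u, m, h in raw_log]
print(aggregate_usage(pseudonymous_log))
```

Pseudonymised data is still personal data under GDPR for as long as the key exists, so a sketch like this reduces risk rather than removing it. The aggregated report is the part that could more safely be shared with a service provider.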

Challenges and Conclusions

The workshop prompted the group to consider the cultural changes that would be required to get users on board with shifting to new ways of tracking machines: explaining and communicating the benefits of less downtime, and introducing the changes progressively so that people get used to them. Giving progressively more information in exchange for gathering more data was a debated strategy. This also prompted thinking about how concepts like consent must be expected to change over time: not collecting consent once and treating it as valid forevermore, but re-obtaining it periodically.
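One way to picture consent that changes over time is a check that asks for renewal either when consent is older than some validity period, or when the system starts collecting a category of data the user never agreed to. The Python sketch below assumes a 180-day validity period and simple category labels. Both are hypothetical choices for illustration, not recommendations from the workshop.

```python
from dataclasses import dataclass
from datetime import date, timedelta

CONSENT_VALIDITY = timedelta(days=180)  # assumed renewal period


@dataclass(frozen=True)
class Consent:
    """Hypothetical consent record: when it was given and what it covers."""
    granted_on: date
    categories: frozenset


def needs_renewal(consent, collected_categories, today, validity=CONSENT_VALIDITY):
    """Ask again if consent has expired or data collection has widened."""
    expired = today >= consent.granted_on + validity
    widened = not set(collected_categories) <= consent.categories
    return expired or widened


consent = Consent(granted_on=date(2019, 1, 1), categories=frozenset({"access_log"}))
print(needs_renewal(consent, {"access_log"}, date(2019, 3, 1)))
print(needs_renewal(consent, {"access_log", "eye_tracking"}, date(2019, 3, 1)))
print(needs_renewal(consent, {"access_log"}, date(2019, 7, 1)))
```

In this sketch, adding eye tracking to a system that previously only logged access would prompt a new consent request, which mirrors the strategy of giving more information as more data is gathered.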

In terms of their future practice, at the end of the workshop participants mentioned that they will need to be more reflective when designing systems, and pause to think: should we collect and use this data? One participant stated:

“So, I think being really conscious as you design in these systems, what should you be using? What data should you be collecting…reasonably, and what data do you really need to offer the service that you want to offer, compared to, what data could you be collecting, and what could you be using it for?”

In addition to this, they saw the need to create more meaningful and transparent relationships with service users, providing them with information they can act on and use, and to build in safeguards by design. This could include moving away from models of collecting data now in the hope it will be useful later, towards creating codes of conduct and guidelines for data-driven design, training about how GDPR really impacts their work, and privacy impact assessments that are more substantive than procedural. They also felt there can be a disconnect between high-level policy and implementation by designers, who may have to deal with risks themselves, balancing this against the reality that it is not feasible to expect all designers to become GDPR experts.

The groups appreciated the role of our cards in evaluating their systems, as the cards helped to stimulate thought, making the issues real and reminding them of ones that might otherwise be forgotten. The cards were felt to be more accessible than going to the GDPR source material, as they do not assume you are an expert, and are more pragmatically orientated. One participant made the analogy that using the cards is like learning by example when using a new television, instead of reading the whole instruction manual before switching it on. They also saw real-world value for the cards, in terms of using them with developers and business analysts before developing a project, but also six months in, to evaluate impacts longitudinally.

To conclude, then, the workshops were a valuable experience, both for us as researchers and for the participants, to unpack and challenge some of the assumptions around the integration of IoT into universities and research projects, and how this speaks to challenges broader than just the technology, from participatory design and engagement with all stakeholders, to policy and obligations. The cards provided a mechanism to scaffold detailed reflection and assessment of issues in a fairly short time frame (the entire workshop was 1.5 hours, including a 30-minute overview at the beginning). As we’ve found in previous work, the ability of cards to translate complex ideas into a more accessible form is valuable, as is their ability to enable the rapid ideation and exploration of new ideas. We urge anyone interested in our project to give the Moral-IT and Legal-IT decks a try, to see how they might help with your work (and of course, let us know how you got on).

[1] https://www.horizon.ac.uk/project/privacy-by-design-cards/

[2] https://lachlansresearch.com/the-moral-it-legal-it-decks/ developed with Dr Peter Craigon within our Horizon Digital Economy Research Institute funded MoralIT: Enabling Design of Ethical and Legal IT Systems Project.

[3] https://lachlansresearch.files.wordpress.com/2018/07/process_board.pdf

[4] https://www.youtube.com/watch?v=NlKjFZwW3Ts
