May 4, 2020, by Hazel Sayers

ICUBE

Collaborative robots, or co-bots, currently rely on explicit, hierarchical, mechanical instructions from humans and take no account of other important cues such as pose, expression and language. Co-bots therefore lack the ability to sense humans and their behaviour appropriately. I-CUBE has been addressing this challenge and initiated a study to capture the language and gestures humans use while collaborating with a robot on a simple laundry-sorting task. The study enabled the team to examine human interactions in a prescribed situation and to collate data that supports co-bot learning and improves co-bots’ sense of their environment and the objects within it.

The team has submitted a second study to the School of Computer Science Ethics Committee and is awaiting a decision.

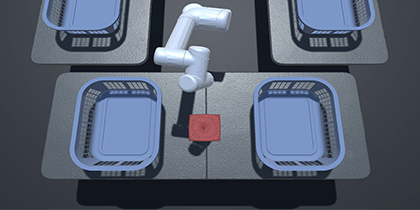

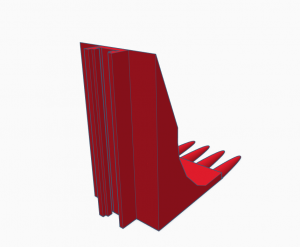

Development of two demonstrators is underway. The first, using kit newly acquired by the Beacon, allows a user to teach a Universal Robots UR3 co-bot, fitted with a custom-designed grabber, to pick up and move items of laundry according to the user’s preference. The UR3 is connected to a server, which also receives input from a camera and a microphone. Using these, the co-bot can recognise the user’s voice and facial expressions, and can track a person’s body pose using skeleton tracking. The UR3 will use these inputs to determine the user’s commands and intentions. The UR3 uses a Reinforcement Learning algorithm to learn policies for sorting laundry, moving from a default sorting policy to the user’s preference. Facial expressions are used to increase or decrease the weights of rewards and penalties during learning. The second demonstrator uses the same learning algorithm but runs on a 3D simulation of the robot and its environment (including the clothes), which allows it to be delivered on a tablet or similar device.
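To make the learning idea concrete, here is a minimal sketch of how a sorting policy could move from a default to a user's preference, with facial expressions scaling the reward or penalty. It is an illustration only, not the project's implementation: the post does not say which Reinforcement Learning algorithm is used, so a simple tabular value update with epsilon-greedy exploration is assumed here. The item categories, bin names, expression labels and weight values are all hypothetical, and the user's reaction is simulated rather than read from a camera.

```python
import random

# Hypothetical laundry categories and destination bins (not from the project).
ITEMS = ["white", "dark", "colour"]
BINS = ["bin_a", "bin_b"]

# Hypothetical weights: a strong expression amplifies the reward or penalty.
EXPRESSION_WEIGHT = {"happy": 1.5, "neutral": 1.0, "unhappy": 1.5}


def make_q_table(default_policy, prior=0.5):
    """Start from the default sorting policy by giving its choices a head start."""
    return {
        item: {b: (prior if default_policy[item] == b else 0.0) for b in BINS}
        for item in ITEMS
    }


def choose_bin(q, item, epsilon, rng):
    """Epsilon-greedy choice: mostly pick the best-known bin, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice(BINS)
    return max(BINS, key=lambda b: q[item][b])


def update(q, item, chosen, base_reward, expression, alpha=0.3):
    """Scale the reward by the expression weight, then nudge the value estimate."""
    reward = base_reward * EXPRESSION_WEIGHT.get(expression, 1.0)
    q[item][chosen] += alpha * (reward - q[item][chosen])
    return reward


def train(default_policy, user_preference, episodes=200, epsilon=0.2, seed=0):
    """Simulate a user who smiles at correct placements and frowns at wrong ones."""
    rng = random.Random(seed)
    q = make_q_table(default_policy)
    for _ in range(episodes):
        item = rng.choice(ITEMS)
        chosen = choose_bin(q, item, epsilon, rng)
        correct = user_preference[item] == chosen
        base_reward = 1.0 if correct else -1.0
        expression = "happy" if correct else "unhappy"  # simulated feedback
        update(q, item, chosen, base_reward, expression)
    # The learned policy is the best-valued bin for each item.
    return {item: max(BINS, key=lambda b: q[item][b]) for item in ITEMS}
```

For example, calling `train({"white": "bin_a", "dark": "bin_a", "colour": "bin_a"}, {"white": "bin_a", "dark": "bin_b", "colour": "bin_b"})` starts from a policy that puts everything in one bin and should end with the simulated user's preferred split. Because each placement gets immediate feedback, the sketch needs no multi-step lookahead; a real robot task with longer action sequences would likely need a fuller algorithm.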

ICUBE was presented at the Connected Everything conference 2019. In addition to preparing publications, the project team has been identifying opportunities and working on bids to investigate this area of research further, combining digital and physical technologies to make products more intelligent and adaptive.
