May 24, 2024, by Brigitte Nerlich

AI safety: It’s everywhere but what is it?

AI Safety is the new black. It is everywhere. As Alex Hern wrote from the Seoul AI safety summit on Tuesday, “The hot AI summer is upon us”, and with it the hot AI safety summer…

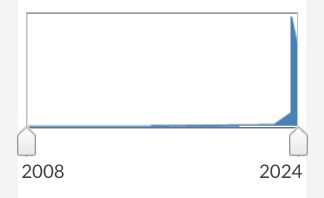

When you look at this timeline for “AI safety” on the news database Nexis, you can see that the topic exploded in English news coverage shortly after OpenAI launched ChatGPT at the end of 2022. Of course, people have been thinking about AI safety (risks, regulations, standards, mitigation, alignment, responsibility, etc.) since the dawn of AI in the 1950s, and even more so since scary stories about existential risk began to spread around 2015. But with the sudden ubiquitous access to AI, or rather a certain type of AI, at the end of 2022 things have changed quite radically and a wave of AI safety talk has gained momentum.

AI safety – the spreading wave

Surprisingly proactive, the UK government was the first to ride this wave, hosting an inaugural AI safety summit in November 2023. This has just been followed by another summit in Seoul, which took place while I was writing this, on 21-22 May 2024, co-hosted by South Korea and the UK. And there will be another in France in the autumn… During the Seoul summit an inaugural International Scientific Report on Advanced AI Safety was published.

At the same time as the first summit, in November 2023, the UK set up the first AI Safety Institute “to cement the UK’s position as a world leader in AI safety” and in May 2024 it established a branch of the Institute in Silicon Valley.

In between, lots of things happened.

In February 2024 the US launched its own AI Safety Institute (its creation had been announced in November 2023).

In March 2024 the European Parliament “approved the Artificial Intelligence Act that ensures safety and compliance with fundamental rights, while boosting innovation” and the UN General Assembly approved its first resolution on the safe use of AI systems.

One should perhaps also mention that in April 2024, the ‘Future of Humanity Institute’ at the University of Oxford closed down. It had been established in 2005 under the leadership of Nick Bostrom, and, whatever one may say about it, it stimulated energetic discussions about AI risks and safety.

In 2015, another institute, the ‘Future of Life Institute’ (founded in 2014), published an open letter, signed by prominent AI researchers and industry leaders, including Elon Musk, calling for robust AI safety research, again stimulating lots of discussion…

The same year, 2015, Musk co-founded OpenAI, a non-profit research organisation, alongside Sam Altman, Greg Brockman, Ilya Sutskever, John Schulman, and Wojciech Zaremba. OpenAI’s mission is to ensure that artificial general intelligence (AGI) benefits all of humanity, and its charter says that it is committed to conducting research in a way that is transparent, cooperative with other institutions, and focused on long-term safety. (While I was writing this, the controversy about OpenAI and Scarlett Johansson was in the news, one in a long line of controversies.)

It seems 2015 was the beginning of the AI safety wave which gathered strength in 2022 and is now sloshing over us.

AI safety – the spreading definition

I am not an AI expert. So, I was wondering what AI safety could be. I looked at Wikipedia and found this: “AI safety is an interdisciplinary field concerned with preventing accidents, misuse, or other harmful consequences that could result from artificial intelligence (AI) systems. It encompasses machine ethics and AI alignment (which aim to make AI systems moral and beneficial), monitoring AI systems for risks and making them highly reliable. Beyond AI research, it involves developing norms and policies that promote safety.” That sounds sensible.

However, this is not like seatbelts in cars, I thought. AI is everywhere and so is AI safety – there is AI safety in trains, planes and automobiles, in medicine/healthcare, in finance, in social care, in policy making, in science and in science communication, and not to forget the underlying safety of AI systems like LLMs, the AI assistants and so on…

Do we have experts in all these safety domains? I suppose we do, but they are not in the limelight. The experts that are in the limelight are those that link AI safety talk to talk about ‘existential risk’, a rather shadowy and undefined concept, in my view, but one that spreads well in discourse about AI.

So, we seem to have a wide variety of particular ‘AI safeties’. But that’s not all. The overall definition of AI safety itself is breaking down, it seems. Some people are worried about the risks of expanding the definition of ‘AI safety’ to cover everything, from, say, “nuclear weapons to privacy to workforce skills”, a process that can lead to a loss of meaning. (And I haven’t even mentioned AI safety definitions in various disciplinary contexts, in various geographical/political contexts, in various historical contexts…)

How can one make sense of AI safety? As a first step, one can perhaps distinguish between what I call visible and invisible AI safety.

On the one hand, there is AI Safety with a big S (visible AI safety), much talked about by politicians but rarely understood by them or by lay people like me. It is, for example, nice to hear at the Seoul summit that “Nations commit to work together to launch international network to accelerate the advancement of the science of AI safety”.

On the other hand, there are those doing AI safety with a small s (invisible AI safety), that is, actual people on the ground who engage in the detailed science of AI safety and those engaged in the myriad practices of AI safety. What they do is quite alien to me, just as alien as the alien worlds they try to fathom. Try reading this paper (linked to Anthropic’s work on AI safety) for example. It is purported to be rather groundbreaking.

And then there are civil society bodies that also try to understand wider AI safety issues from climate change to poverty, and that are calling for the public to be involved. One can talk here perhaps about AI safety governance.

Overall then, we have, perhaps, visible AI safety as a political object and invisible AI safety as (interlinked) science, practices and governance/ethics. And then we have us, the public…

The need for science communication

The public are people like me, trying to understand what’s going on in the world of AI safety. We find ourselves confronted with a rather complex and multi-faceted issue. What we need is some really great science communicator who can explain it all to us.

I for one would love for somebody to interpret pronouncements by politicians such as this tweet by Saqib Bhatti MP written on 18 May: “With the #AISeoulSummit next week, I met with @ucl, @turinginst & others to discuss how to take a proactive approach to AI safety, whilst also managing concerns & potential risks.” I wondered… how can you be proactive about AI safety without also dealing with concerns and risks? It’s all very puzzling.

At the Seoul summit politicians have promised to “forge a common understanding of AI safety”. I can’t wait! I hope it comes with a ‘lay summary’.

Image: By Katsushika Hokusai – Metropolitan Museum of Art: entry 45434, Public Domain

Afterthought: I wonder a bit if AI safety is rather like gun safety. The thing itself is so versatile that it is hard to build in safeguards against all the different ways people might use it. Just as a gun could be used for harmless target practice, or to kill your next-door neighbour, something like an LLM could be used to write a soothing letter to your poorly relative, or create a framework of blackmail and disinformation with which to threaten someone. How do the makers of the system restrict its uses, when the potential is always there in the system itself? By contrast, making a parachute safe, for example, is simply a matter of building it right for its one and only use. In that case, safety can more easily rest in the hands of the producer.