December 10, 2021, by Brigitte Nerlich

AI and the (public) understanding of science

This week many people saw their Twitter timelines swamped by AI-generated artwork depicting thesis/dissertation titles. Most of the renditions I saw related to science, because that’s what my Twitter timeline is about. But there were also some pertaining to the arts, humanities and social sciences (there was, for example, one depicting the title “Tracking Technology and Society along the Ottoman Anatolian Railroad, 1890-1914”). Some startle you with their vibrancy, others surprise you with their accuracy and seemingly intuitive grasp of the underlying science. They made me think about people’s and AI’s (public) understanding of science.

How it began

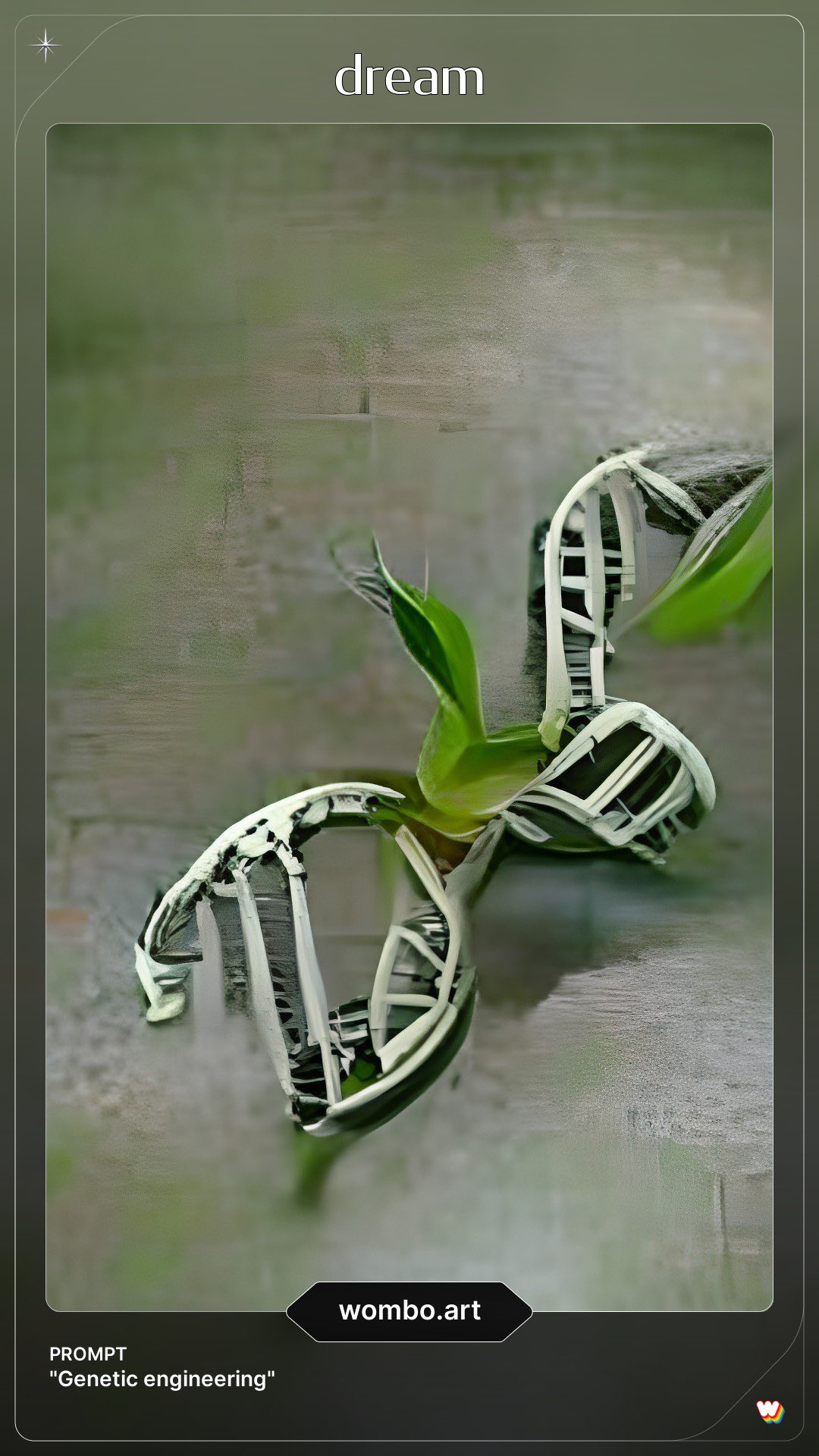

I first started to notice tweets like this around the 5th of December: “Enter your thesis title into this AI artwork platform. https://app.wombo.art” (image: mirror neurons – see right), or the same request on the 6th of December (image: towards a posthuman curriculum), etc. But what really made me sit up and take notice was an image of ‘genetic engineering’ tweeted by Matthew Cobb on 5 December, which is just too apposite for words, followed on the 6th of December by Kevin Mitchell tweeting an image generated for ‘epigenetics’. (I’ll get back to these images.) Wow, I thought, what is going on?

AI-generated art

I tried to find out a bit more about the wombo art app mentioned in some of the tweets I saw, and, of course, test it out for myself.

I found out that “WOMBO AI is a Canadian artificial intelligence company that earlier this year released an app called WOMBO Dream. The app, available on most online stores and through an online browser, uses AI to combine word prompts with an art style to create beautiful and completely original paintings in a matter of seconds.” And “The app was built by the company behind Wombo AI, the popular deepfake singing tool”, that is, an “app that lets you feed in static images to create lip-synced renditions of memeable songs.” The app can be used for all sorts of things, most importantly perhaps to create ‘fan art’. On TikTok, users have been using the app to generate “AI paintings based on song titles from Harry Styles and Taylor Swift, as well as Marvel characters – and the results are absolutely stunning”.

You can choose styles like mystical or steampunk, pastel or vibrant, for example, but also some I don’t understand, like ukiyoe. Let’s now turn to the use of this app and its styles for making science vibrant. (There is more to say about the financial model underlying all this, but that’s for somebody else to explore.)

AI-generated science

James Vincent has written an interesting article for The Verge in which he looks at the app and says: “Consider, for example, the image below: ‘Galactic Archaeology With Metal-Poor Stars.’ Not only has the app created a picture that captures the mind-boggling galactic scale of a nebula, but the star-like highlights dotted around the space are mostly blue — a tint that is scientifically accurate for metal-poor stars (as metallicity affects their color).” Image on the right.

Not being a galactic archaeologist (I wish I were!), I wouldn’t have noticed this, nor would I have known about it, but the AI apparently did! And that brings us back to genetic engineering and epigenetics.

At the height of the craze, just before the Number 10 Christmas party took over my timeline, I saw many really nice renditions of rather complex science topics. Here are some random examples, which also demonstrate the rich diversity of science: “Evolution of Mating Systems in Sphagnum Peat Mosses”, “The impact of genetic composition and sex on responses to pesticides in Drosophila melanogaster”, “Redox and axial ligation reactions of macrocyclic complexes of cobalt”, “Design and Synthesis of Branched Polymer Architectures for Catalysis” and “Adaptive advantages of cooperative courtship in the lance-tailed manakin”. The creators of these images, who must know a bit more about these topics than I do, thought they were cool and accurate.

There were two AI-generated science images that I especially liked. Matthew Cobb, a zoologist and science writer, created an AI artwork by using the simple key words “genetic engineering”, choosing ‘no style’. The result was striking. It was even more surprising given the fact that Matthew did a radio programme on “Genetic dreams, genetic nightmares” and has also written a book on the topic that will come out soon. It was as if the AI had read his mind, as far as I can read his mind. Strangely, when I, a non-expert, tried the same key words I got something really boring. That’s me shown, then. My mind was not one the AI bothered to read.

The other cool image was one created by Kevin Mitchell, a neurogeneticist and destroyer of epigenetic myths. He just used the word “epigenetics” and got this – see right.

I am not an epigenetics expert, but I know a little bit about the topic, so I could interpret, I think, what the AI was trying to say. Many definitions of epigenetics go like this: “In biology, epigenetics is the study of heritable phenotype changes that do not involve alterations in the DNA sequence. The Greek prefix epi- in epigenetics implies features that are ‘on top of’ or ‘in addition to’ the traditional genetic basis for inheritance” (Wikipedia). And what do we have here? The image shows something over and above genetics! There is also one important metaphor used in epigenetic research, created by Conrad Waddington in 1956, namely the ‘epigenetic landscape’, and, as far as I could see, there is quite a nice epigenetic landscape in this image. Did the AI do all this, or is this like me reading the tea leaves?

Beyond core science topics, people also tried out topics related to science, such as “science communication”, which, I think, was a flop. Other themes worked better, such as “science and art”, “women in science”, “chemistry education” etc. A topic dear to my heart and to that of Peter Broks also worked rather well in steampunk: “Science in the popular press”. I myself tried out “climate change in the media” and the AI came up with this picture, which I found quite impressive – see left.

AI-generated art and public understanding of science

Science communicators are often asked to use art in their engagement activities. I asked myself what contributions this type of AI-generated art might make to science communication and the public understanding of science. I certainly had the impression that this tool engaged the scientists and science communicators who used it. Some of the images also engaged me as a lay person when the science behind them was totally unknown to me. There were differences in this engagement, though. When I had some knowledge of a science issue depicted, I enjoyed the pictures at a deeper level than when I didn’t have that knowledge. Then I just enjoyed the aesthetics of it all, at least for a while, before things became a bit boring.

But what, if anything, does this tell us about public understanding of science? That such understanding depends quite a lot on what we already know, which presents us with a bit of a conundrum, as imparting knowledge is sometimes regarded as just pandering to the ‘deficit model’. However, in my view, for science communication to succeed and public understanding of science to flourish we need to understand on what foundations we (as interlocutors) stand in the first place and take it from there using art, rhetoric, even facts, whatever is to hand, in the right proportions.

What about the AI itself, what about its knowledge and understanding? Actually, it has no ‘knowledge’ or ‘understanding’. “It fundamentally doesn’t and cannot understand what its goal is supposed to be, it only knows Things That Look Like Other Things.” (But what do knowledge and understanding actually mean, even for us humans…?) One thing is certain. The AI will recreate a society’s biases alongside that society’s knowledge base and its dominant aesthetics. You can see this in the artwork generated when the AI was prompted with the words “Professor of Philosophy”, which “only yields old white men with white beards”. I tried out ‘public understanding of science’ and got a similar result (style: Ukiyoe) – see right below.

AI bias always reflects societal biases, and that will only change when we change, including the ways we feed biases, sorry, information, into AI. “Knowing how to mitigate bias in AI systems stems from understanding the training data sets that are used to generate and evolve models.” This in turn depends on public knowledge and understanding of science and society. And finally, of course, we also need more efforts to enhance public understanding of AI.

Images with permission from WOMBO.eth (and I did the snowflake in the featured image just for fun, because it’s the season).