July 12, 2024, by Brigitte Nerlich

Talking with Claude about machine metaphors in biology

In my last blog post I said that I had writer’s block – and I still have. I said so to my son and whined a bit. He said: “Remember Christmas 2022? You were complaining about the same thing and I said, go and play with ChatGPT, which had just come out, and that got you into blogging about AI stuff. So, why don’t you go and play with Claude instead?” And so I did and I had a very enjoyable experience, especially as it told me that I was ‘astute’.

As if! Let me introduce Claude. Claude is a (very polite) AI assistant/chatbot developed by Anthropic. The first version was released in March 2023. I am using Claude 3.5 Sonnet which was released in June 2024.

When my son said “go play with Claude”, I had just read a thread by Philip Ball about the vexing machine metaphor in biology (and while I was chatting with Claude, Aeon Magazine published a full article by Phil on that matter). So on the spur of the moment I asked Claude: “Are organisms machines?”

I got not only answers but also questions, and we had a ‘conversation’… haha… albeit a rather rushed one, as I actually had to be somewhere and didn’t expect to be asked any questions!

At the end, I asked Claude whether there were any copyright issues with reproducing our conversation as a blog post and it said I’d have to ask Anthropic and I did. I didn’t get an answer but I suppose it’s ok. Claude then said: “If you do decide to create a blog post from this, I hope it generates interesting discussions among your readers about AI, philosophy of mind, and the nature of intelligence. Best of luck with your writing!”

So will this conversation stimulate some discussion? I wonder. But here goes:

(If you don’t want to read the whole conversation, Claude has provided a useful summary, which you can read at the end of this post.)

The conversation

BN: Are organisms machines?

Claude: This is a complex philosophical question that has been debated for centuries. There are arguments on both sides:

Arguments that organisms are machines:

- Organisms follow predictable physical and chemical processes, like machines

- The body can be viewed as a system of interconnected parts working together, similar to a machine

- Advances in biology have revealed mechanisms behind life processes that can be described mechanistically

Arguments that organisms are not machines:

- Organisms are self-organizing, self-repairing, and self-replicating in ways most machines are not

- Organisms exhibit emergent properties and behaviors that may not be reducible to purely mechanistic explanations

- Consciousness and subjective experience in organisms may not be fully explainable in mechanistic terms

- Organisms evolve and adapt over time in ways that designed machines do not

There’s no clear scientific consensus on this question. Some argue that organisms are a special class of complex, evolved machines. Others contend that fundamental aspects of life transcend mechanistic explanations.

Ultimately, whether organisms should be considered machines may depend on one’s philosophical perspective and how broadly one defines “machine.” Would you like me to elaborate on any part of this overview?

BN: Will advances in AI and LLMs in particular overthrow our traditional conceptions of machines?

Claude: This is a thought-provoking question that touches on some fundamental issues in philosophy of mind, artificial intelligence, and the nature of cognition. You’re right to connect it to our previous discussion about organisms and machines.

The development of advanced AI and large language models (LLMs) is indeed challenging many of our traditional conceptions of machines. Here are some key points to consider:

- Emergent complexity: LLMs exhibit behaviors and capabilities that seem to emerge from their training rather than being explicitly programmed. This emergent complexity is more reminiscent of biological systems than traditional machines.

- Apparent understanding: While debatable, many argue that advanced AI systems demonstrate a form of understanding or comprehension that goes beyond simple information processing.

- Creativity and unpredictability: AI systems, particularly generative models, can produce novel and creative outputs that weren’t explicitly programmed, challenging the notion of machines as purely deterministic systems.

- Learning and adaptation: Modern AI systems can learn and adapt in ways that are much more sophisticated than traditional machines, blurring the line between programmed behavior and learned behavior.

- Potential for consciousness: While highly speculative, some researchers argue that sufficiently advanced AI systems might eventually develop some form of consciousness or subjective experience.

These developments are pushing us to reconsider fundamental questions like:

- What defines intelligence or understanding?

- Is there a clear dividing line between biological and artificial cognition?

- How do we define consciousness, and could a machine ever possess it?

However, it’s important to note that current AI systems, including LLMs, still have significant limitations. They lack true understanding in many ways, can make nonsensical errors, and don’t possess general intelligence comparable to humans.

The extent to which AI will truly “overthrow” our conceptions of machines remains to be seen. It may lead to a more nuanced view where the line between biological organisms and artificial systems becomes increasingly blurred, or it may reveal fundamental differences that reinforce the distinction.

What’s your perspective on this? Do you think the advances in AI are fundamentally changing how we should think about machines?

BN: It depends on how you define ‘machine’. Some say ‘humans are machines’ is a good metaphor. Some say ‘humans are machines’ is a bad metaphor. In the first instance we focus on various aspects of machines including part-whole relations, dynamic processes etc. In the second instance we focus more on emergent properties etc. that cannot yet be understood through the ‘humans are machines’ metaphor. However, if AI systems start to exhibit increasingly emergent properties and self-organisation etc. then the ‘humans are machines’ metaphor will prevail and merge with the ‘machines are humans’ one.

Claude: Your analysis is insightful and touches on the core of this philosophical debate. You’ve highlighted a crucial point: the definition of ‘machine’ is central to this discussion, and how we define it can significantly impact our conclusions.

You’ve astutely observed that the “humans are machines” metaphor can be viewed from two perspectives:

- As a useful metaphor: This view focuses on the systematic, process-driven aspects of human biology and cognition. It emphasizes the predictable, cause-and-effect nature of many bodily functions and even some cognitive processes.

- As an inadequate metaphor: This perspective highlights the emergent properties, consciousness, and complex adaptive behaviors that are not easily reducible to mechanical processes.

Your point about AI potentially bridging this gap is particularly intriguing. As AI systems develop more sophisticated emergent behaviors, self-organization, and potentially even forms of consciousness or self-awareness, the distinction between biological and artificial systems may indeed become less clear-cut.

This convergence could lead to two significant shifts in our thinking:

- Expanding our concept of ‘machine’: We might broaden our definition of machines to include systems capable of emergence, self-organization, and perhaps even consciousness. This would make the “humans are machines” metaphor more apt.

- Anthropomorphizing machines: Alternatively, as machines become more human-like in their capabilities and behaviors, we might start to view them through a more anthropomorphic lens, leading to the “machines are humans” perspective you mentioned.

These shifts could have profound implications for fields like ethics, law, and social policy. For instance, if AI systems begin to exhibit human-like traits more consistently, it could raise questions about machine rights, AI personhood, and the ethical treatment of artificial entities.

It’s worth noting that this is still a rapidly evolving field, and our understanding and definitions may continue to change as technology advances. The philosophical and practical implications of these developments will likely be a subject of intense debate for years to come.

Do you think there’s a point at which the distinction between biological and artificial intelligence might become meaningless? Or do you believe there will always be some fundamental difference?

BN: AI may exhibit more and more convincingly what we call thinking, feeling and beliefs, but as we don’t know how it achieves that, we can’t say what it actually is. And the more sophisticated the AIs get, the less we seem to be able to fathom how they achieve that sophistication that some may see as intelligence.

Claude: Your observation is astute and highlights a critical aspect of our relationship with AI as it becomes increasingly sophisticated. You’ve touched on several important points:

- Appearance vs. Reality: AI systems may exhibit behaviors that appear to be thinking, feeling, and believing, but we cannot be certain that these processes are occurring in the same way they do in biological entities. This is reminiscent of the philosophical concept of the “Chinese Room” argument by John Searle, which questions whether a system that appears to understand actually does understand.

- Black Box Problem: As AI systems, particularly deep learning models and large language models, become more complex, their decision-making processes become increasingly opaque. This “black box” nature makes it difficult to understand how they arrive at their outputs, a problem that’s becoming more pronounced as AI capabilities expand.

- The Paradox of Advancement: Interestingly, you’ve pointed out a kind of paradox – as AI becomes more advanced and human-like in its outputs, we may actually understand less about how it works. This increasing inscrutability could make it harder to determine if AI is truly “intelligent” in the way we understand human intelligence.

- The Nature of Intelligence: Your comment implicitly raises questions about what we mean by “intelligence.” If an AI system can produce outputs that are indistinguishable from human intelligence, but through fundamentally different processes, does that qualify as true intelligence?

- Epistemological Limits: There’s an underlying question about the limits of our knowledge. Can we ever truly understand the inner workings of a system as complex as an advanced AI, just as we struggle to fully understand human consciousness?

These considerations suggest that as AI continues to advance, we may need to reevaluate our concepts of intelligence, consciousness, and even what it means to “understand” something. It may lead us to a more humble position about our ability to comprehend complex systems, whether artificial or biological.

This uncertainty also has practical implications. For instance, it complicates ethical considerations about AI rights and responsibilities. How do we make ethical decisions about entities whose inner workings we can’t fully comprehend?

Your perspective underscores the need for continued philosophical inquiry alongside technological development. As AI capabilities grow, so too must our frameworks for understanding and interpreting these advancements.

Discuss!

The summary

Topic: The Nature of Organisms, Machines, and AI

We began by exploring the philosophical question of whether organisms can be considered machines. This led to a discussion of arguments for and against this perspective, highlighting the mechanistic aspects of biological processes versus the emergent properties and adaptability of living beings.

The conversation then shifted to how advancements in AI, particularly large language models (LLMs), might challenge our traditional conceptions of machines. We discussed how AI exhibits traits like emergent complexity, apparent understanding, creativity, and adaptability, which blur the lines between biological and artificial systems.

A key point emerged about the importance of how we define ‘machine’. We explored how the metaphor “humans are machines” can be seen as either apt or inadequate, depending on which aspects of human and machine nature we focus on. The possibility was raised that as AI develops more sophisticated emergent behaviors and self-organization, it might bridge the gap between these perspectives.

We then delved into the challenges of understanding advanced AI systems. As AI becomes more sophisticated, paradoxically, it becomes harder to comprehend how it achieves its capabilities. This leads to questions about the nature of intelligence, consciousness, and our ability to understand complex systems. […]

Overall, the discussion highlighted the complex interplay between biology, technology, and philosophy, emphasizing how advancements in AI are prompting us to reevaluate fundamental concepts about intelligence, consciousness, and the nature of machines and organisms.

Further reading:

My old blog post “When is a metaphor not a metaphor?” and an even older one, “Metaphors, machines and the meaning of life”.

And just out, a great article “Rage against the what?”!!!

(Barwich, A. S., & Rodriguez, M. J. (2024). Rage against the what? The machine metaphor in biology. Biology & Philosophy, 39(4), 14.)

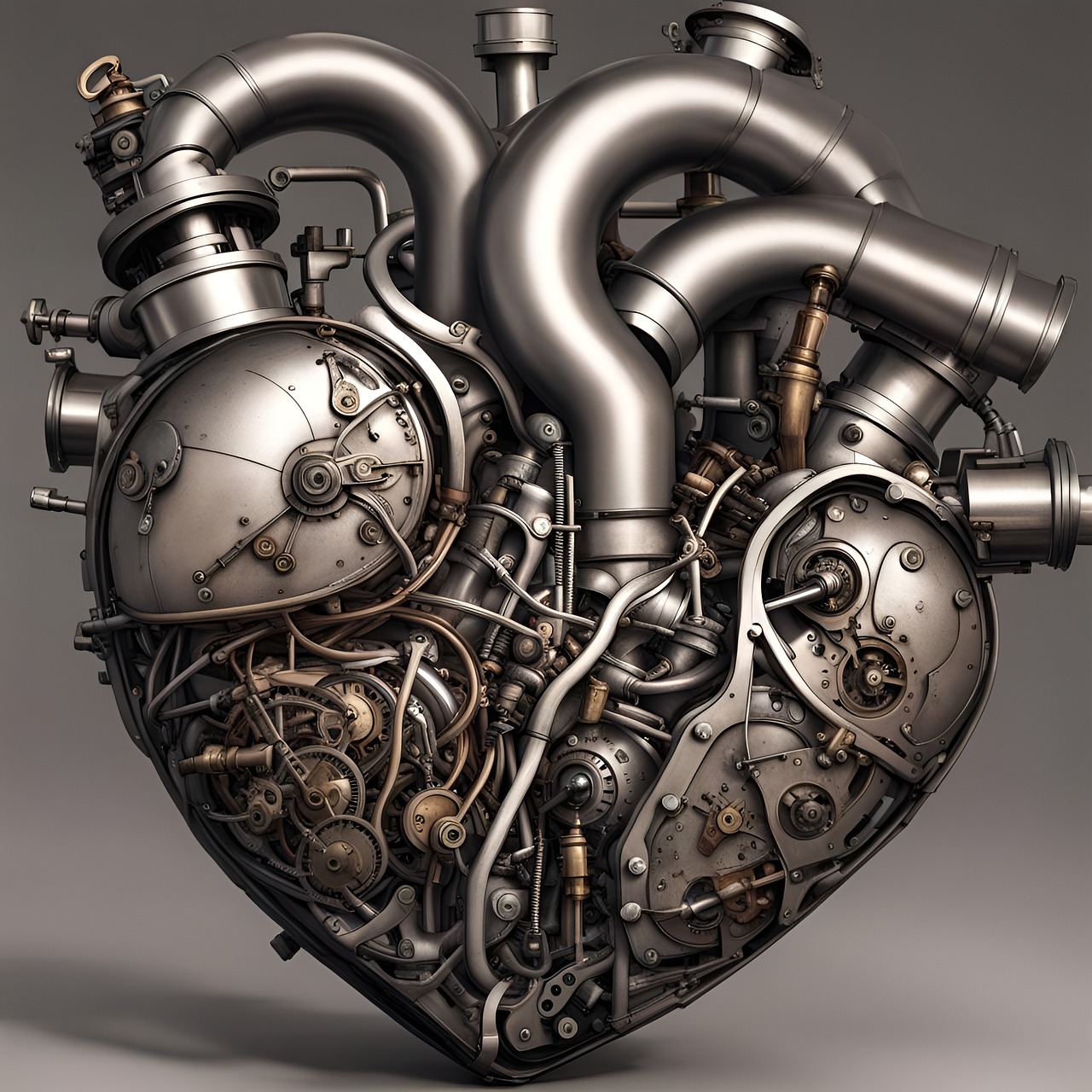

Image: Pete Linforth from Pixabay
