June 28, 2024, by Brigitte Nerlich

Metaphor identification: From manual to automatic

I have written about metaphors for AIs and LLMs (large language models) like ChatGPT, but I don’t know much about what one might call the mechanics of metaphor recognition, identification and interpretation inside LLMs. So, I wanted to find out and went down a rabbit hole – I never quite reached the bottom…

Metaphor and MUNCH

I googled a bit and found one article that seemed to be quite central to the topic. This was confirmed when I asked ChatGPT about LLMs and metaphor and the only real article it cited was the one I had found myself. This was a preprint by Xiaoyu Tong, Rochelle Choenni, Martha Lewis, and Ekaterina Shutova entitled “Metaphor Understanding Challenge Dataset for LLMs”.

When I looked at it I was quite surprised to find myself on relatively familiar ground. It started with George Lakoff’s work on conceptual metaphors, the dominant root of all recent metaphor research. It also mentioned work carried out at the VU Amsterdam where researchers around Gerard Steen have assembled and annotated a corpus of metaphors which is, they say, the “largest available corpus hand-annotated for all metaphorical language use, regardless of lexical field or source domain”.

The article by Tong et al. presents its own dataset called MUNCH (Metaphor Understanding Challenge Dataset) based on metaphor samples selected from the VU Amsterdam Metaphor Corpus and focusing on sentences with metaphorical words that could be paraphrased by a single word; e.g. ‘stir excitement’ might be paraphrased as ‘provoke excitement’.

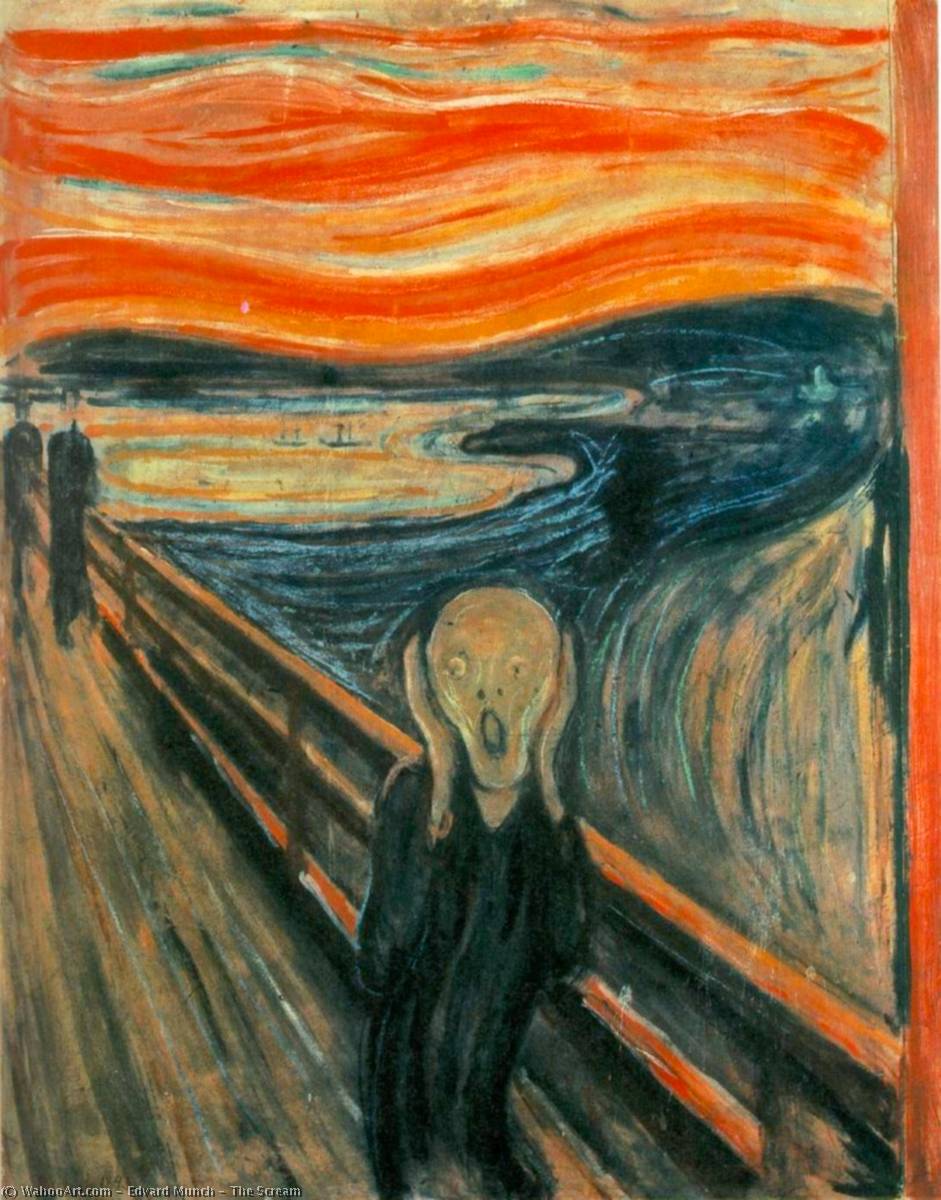

Reading this, I thought that it would be great to have an overview of the research leading from Lakoff in the 1980s to LLMs now. I started to follow the trail, things got extremely complicated, and I felt a bit like the person in Edvard Munch’s painting The Scream. (And I bet I got many things wrong! I am skimming over the top of a lot of conceptual icebergs – so follow the links.)

Conceptual metaphors and pattern recognition

In 1980 George Lakoff and Mark Johnson wrote a seminal book called Metaphors We Live By which jump-started modern metaphor analysis and cognitive linguistics.

They made clear that metaphors are everywhere and that they are based on mappings between fields of knowledge or experience: between, say, anger and heat (‘She got hot under the collar’), relationships and journeys (‘They had reached a dead end’), time and money (‘I don’t want to spend any more time on this’), and so on.

Detecting and interpreting such metaphors in texts or conversations was a rather subjective and intuitive ‘procedure’ based on reading a text ‘literally’ and then pricking up your mental ears and listening for discordant semantic notes, so to speak.

For example, when reading the sentence ‘she battled with cancer’, your literal reading flags up the word ‘battle’. This is because the conventional meaning of the word conjures up images of war and bloodshed, while in this context it must mean something different, like enduring chemotherapy, for example. Then you read the sentence ‘she lost her fight against cancer’ and you realise that something is going on here. In both cases war is involved in the mapping.

This is what Lakoff and Johnson call a ‘conceptual metaphor’. This conceptual metaphor is based on a metaphorical mapping between war (source domain) and cancer (target domain), namely DEALING WITH CANCER IS WAR, where you map aspects of war onto managing cancer. Whether that is an apt metaphor or not doesn’t matter here.
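The pattern-spotting described above can be sketched in a few lines of Python. This is a toy illustration under loudly stated assumptions: the `WAR_LEXICON` word list and the example sentences are invented here, and a word list alone is nowhere near enough for real metaphor identification (‘lost’, for instance, is only sometimes a war word).

```python
# Hypothetical, hand-made lexicon: word forms that evoke the source domain WAR.
WAR_LEXICON = {"battle", "battled", "fight", "fought", "enemy", "weapon", "victory", "lost"}

sentences = [
    "she battled with cancer",
    "she lost her fight against cancer",
    "she had chemotherapy for cancer",
]

def war_terms(sentence: str) -> list[str]:
    """Return the words in a sentence that belong to the WAR source domain."""
    return [w for w in sentence.lower().split() if w in WAR_LEXICON]

for s in sentences:
    hits = war_terms(s)
    # Target domain (cancer) appearing alongside source-domain (war) vocabulary
    # makes the sentence a candidate instance of the conceptual metaphor.
    if "cancer" in s and hits:
        print(f"{s!r}: candidate for DEALING WITH CANCER IS WAR via {hits}")
```

The first two sentences are grouped under the same mapping, while the literal third one is not, which is roughly what the attentive human reader does when the second war word makes them realise that ‘something is going on here’.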

Finding such metaphors, whether as linguistic expressions in texts or utterances or as conceptual patterns, can be a rather subjective enterprise. During the decades that followed the publication of Metaphors We Live By, people tried to make metaphor identification less idiosyncratic and even to automate it.

Automating metaphor analysis

From the 1990s onwards, research in cognitive linguistics and conceptual metaphor theory cross-fertilised with frame semantics, construction grammar and corpus linguistics on the one hand and with computational linguistics, natural language processing research, and (artificial) neural network analysis on the other – all research areas which overlapped with early AI.

In this context, scholars in cognitive linguistics and computer science began to establish and manually annotate big metaphor data banks, from ATT-Meta (linked to an AI group in Birmingham and the work of John Barnden, see below) in the early 2000s to MetaNet established in 2012 (linked to an AI group in Berkeley directed by Srini Narayanan and Lakoff), as well as the Amsterdam corpus and many more.

Research connected with these groups and others also homed in on psychological and neural processing models of metaphorical understanding, at a time when connectionism and neural networks became popular research topics (again). There were also increasing advances in NLP techniques, which led from the use of ‘recurrent neural networks’ to the modern ‘transformers’, introduced in 2017 and transforming modern AI.

From around 2005, there were two other developments in applied rather than computational metaphor research. Scholars in the UK made efforts to detect metaphors with the help of corpus linguistic approaches which use concordances, frequency counts, keyword analysis, and collocation patterns. A top-down formal procedure for metaphor identification was developed in 2007 at the VU Amsterdam. This was a manual Metaphor Identification Procedure (MIP), which formalised steps for identifying metaphors in text and was used in the establishment of the VU Amsterdam metaphor corpus.
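The core decision in MIP can be sketched in Python. This is a simplified sketch under assumptions: the hard, human parts (reading the whole text, deciding each word’s meaning in context and whether it has a more basic meaning elsewhere) are taken as already done by an annotator, the `LexicalUnit` fields are hypothetical names, and the annotations for ‘she battled with cancer’ are invented for illustration, not taken from the VU Amsterdam corpus. Comparing meanings as plain strings is a further simplification.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class LexicalUnit:
    word: str
    contextual_meaning: str          # meaning in this context (annotator's judgement)
    basic_meaning: Optional[str]     # more basic meaning in other contexts, if any
    understood_by_comparison: bool   # can the contextual meaning be understood via the basic one?

def is_metaphorical(unit: LexicalUnit) -> bool:
    """Mark a unit as metaphorical if it has a more basic meaning that
    contrasts with its contextual meaning but can be understood by comparison."""
    if unit.basic_meaning is None:
        return False
    contrasts = unit.contextual_meaning != unit.basic_meaning
    return contrasts and unit.understood_by_comparison

# Hypothetical annotations for 'she battled with cancer'.
units = [
    LexicalUnit("she", "female person", None, False),
    LexicalUnit("battled", "endured a serious illness and its treatment",
                "fought in a war or armed conflict", True),
    LexicalUnit("with", "against", None, False),
    LexicalUnit("cancer", "malignant disease", None, False),
]

print([u.word for u in units if is_metaphorical(u)])  # ['battled']
```

The point of the formalisation is that the final step becomes mechanical and checkable, while the judgements feeding it stay human; that division of labour is what made large hand-annotated corpora possible.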

From the 2000s onwards, the rise of statistical methods in NLP led increasingly to the application of machine learning techniques (that is, pattern recognition over data) to metaphor identification. Researchers used annotated corpora to train models to recognise metaphorical language (sometimes in combination with construction grammar). Automation builds on annotation, and machine learning accelerates the process. (Or as Felix Martin recently said: “This simple recipe – learning algorithms plus computing power plus data – produces prodigious predictive results” – with the emphasis on good datasets.)
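To make ‘training a model on annotated data’ a little more concrete, here is a deliberately tiny Python sketch using a from-scratch multinomial naive Bayes classifier. The six training sentences and their labels are invented toy data, not drawn from any real corpus, and naive Bayes is chosen only because it fits in a few lines; the systems mentioned above used richer features and far more data.

```python
import math
from collections import Counter, defaultdict

# Invented toy training data: each sentence is labelled by whether its verb
# is used metaphorically. Real systems train on large hand-annotated corpora.
TRAIN = [
    ("she battled with cancer", "metaphor"),
    ("they lost the fight against cancer", "metaphor"),
    ("she spent time on the report", "metaphor"),
    ("he battled the enemy army", "literal"),
    ("soldiers fight in the war", "literal"),
    ("she spent money on the report", "literal"),
]

def train_nb(examples):
    """Count words per label for a multinomial naive Bayes classifier."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        label_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return word_counts, label_counts, vocab

def predict_nb(model, text):
    """Return the label with the highest log-probability (Laplace-smoothed)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in text.lower().split():
            if tok in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_nb(TRAIN)
print(predict_nb(model, "the fight against cancer"))            # metaphor
print(predict_nb(model, "soldiers fight the enemy"))            # literal
print(predict_nb(model, "I don't want to spend time on this"))  # metaphor
```

The last prediction is right only because ‘time’ happens to occur in a sentence labelled as metaphorical; the model knows nothing about TIME IS MONEY. That is the emphasis on good datasets in miniature.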

From around 2004 NLP and metaphor research led to new advances in the automatic processing of metaphor. In 2004 Mason established a computational, corpus-based conventional metaphor extraction system or CorMet. Then, in 2010, Shutova “defined automated metaphor interpretation as a paraphrasing task: Given a metaphorical expression where a word is marked as metaphorically used, the model should replace this word with another word to render a literal paraphrase of the expression.” Shutova is, as we have seen, one of the co-authors of the article I mentioned at the beginning.
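Treating interpretation as paraphrasing can be illustrated with a toy Python sketch: generate candidate literal substitutes for the marked word, then keep the one that fits its context best. The candidate lists and co-occurrence counts below are invented stand-ins for the lexical resources and corpus statistics a real system would use, and ranking by a single verb-object count is a deliberate simplification, not Shutova’s actual method.

```python
# Hypothetical candidate literal substitutes for a few metaphorically used verbs.
CANDIDATES = {
    "stir": ["mix", "provoke", "move"],
    "spend": ["pay", "use", "devote"],
}

# Invented verb-object co-occurrence counts standing in for corpus statistics.
COOCCURRENCE = {
    ("mix", "excitement"): 0,
    ("provoke", "excitement"): 12,
    ("move", "excitement"): 1,
    ("pay", "time"): 0,
    ("use", "time"): 7,
    ("devote", "time"): 9,
}

def paraphrase(verb: str, obj: str) -> str:
    """Replace a metaphorically used verb with the candidate that co-occurs
    most often with its object; keep the verb if no candidates are known."""
    candidates = CANDIDATES.get(verb)
    if not candidates:
        return f"{verb} {obj}"
    best = max(candidates, key=lambda c: COOCCURRENCE.get((c, obj), 0))
    return f"{best} {obj}"

print(paraphrase("stir", "excitement"))  # provoke excitement
print(paraphrase("spend", "time"))       # devote time
```

The ‘stir excitement’ pair mirrors the MUNCH example mentioned earlier, where a single replacement word has to carry the literal meaning.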

More recently, new metaphor databanks have been developed, some involving inferencing, others involving paraphrasing, such as the 2018 Metaphor Paraphrase Evaluation Corpus (MPEC) using deep neural networks and the NewsMet dataset, created by Joseph et al. in 2023, which consists of 1,000 verbal metaphors found in news headlines, along with their literal equivalents.

More recently still, developments in deep learning have advanced the field of metaphor identification by using increasingly sophisticated models that capture complex linguistic patterns. As an IBM website points out: “Deep learning is a subset of machine learning that uses multilayered neural networks, called deep neural networks, to simulate the complex decision-making power of the human brain. Some form of deep learning powers most of the artificial intelligence (AI) applications in our lives today.”

And so to LLMs

LLMs are a type of deep learning algorithm designed to analyse and generate human-like text. These models are trained on incredibly vast amounts of data and use machine learning to recognise and reproduce patterns in language, including linguistic structures, context, and semantic relationships.

Increasingly large and sophisticated metaphor databanks come in handy here, as does the fact that metaphors are patterns!

LLMs can thrive because we have witnessed a gradual convergence of two developments: the availability of huge datasets on which they can be trained, including large (manually) annotated metaphor datasets; and great advances in computational, statistical and machine learning approaches, natural language processing, algorithms, artificial neural network research, deep learning research, and so on.

It is in this context that the article I mentioned at the beginning was written. It aims to evaluate and enhance the metaphor ‘understanding’ capabilities of LLMs.

From pie in the sky to practical applications

Over the last few decades, we seem to have come a long way in automating metaphor analysis. In the year 2000, John Barnden, one of the early pioneers in AI and metaphor research, edited a special issue of the journal Metaphor & Symbol with Mark Lee on metaphor and artificial intelligence in which they said:

“Because technological developments are making it increasingly possible and important to include AI elements in publicly available or commercial software, and natural language processing is an important aspect of user friendliness, issues such as metaphor are increasingly becoming looming practical obstacles as opposed to pies in the distant sky. In particular, the development of large text and speech corpora and of tools capable of dealing with their immense size make it reasonable to embark on developing methods for the large-scale semiautomated analysis of metaphor in real discourse.” (p.2)

The recent progress in automated or semiautomated metaphor recognition, identification and interpretation will make AIs like ChatGPT better at mimicking human reasoning, understanding and communication, for good or for ill.

I myself will probably continue to do my metaphor analysis manually, especially since I still don’t understand what I called at the beginning the inner mechanics of metaphor identification within LLMs. One thing is certain, though: the models may well be able to recognise the conceptual metaphor ANGER IS HEAT, but they will not be able to feel it. And I try to get a feel for things.

If you want to see how ChatGPT deals with metaphor, I have provided one example in the comment section.

Image: Edvard Munch, The Scream

I asked ChatGPT two questions about metaphor. In one question I asked about a famous metaphor and that went well. Then I asked about a sentence using an expression based on a more hidden conceptual metaphor and that didn’t go so well.

First, the famous metaphor. I asked what the sentence ‘the world is a stage’ means. Here is the response:

“The world is a stage” is a famous metaphor from William Shakespeare’s play “As You Like It” (Act II, Scene VII). This metaphor is part of a monologue delivered by the character Jaques, which begins with the line, “All the world’s a stage, and all the men and women merely players.”

Meaning and Interpretation:

Life as a Performance:

The metaphor suggests that life is like a play, where everyone has roles to play. Each person acts out their part in the grand drama of life, moving through different stages and scenes.

Roles and Phases of Life:

Just as actors take on different roles and costumes, people go through various stages of life, each with its own responsibilities, behaviors, and expectations. Jaques outlines these stages as the seven ages of man: infancy, schoolboy, lover, soldier, justice, old age, and extreme old age, which he describes as a second childhood and mere oblivion.

Ephemeral Nature of Life:

The metaphor also highlights the transient and performative nature of life. Just as a play eventually ends, so does life. It underscores the idea that our actions and roles are temporary.

Broader Implications:

Social Roles: This metaphor can be interpreted to reflect the social roles people play, suggesting that much of human behavior is performative, dictated by societal norms and expectations.

Philosophical Perspective: From a philosophical viewpoint, it speaks to the nature of existence and identity, questioning how much of what we do is genuine versus how much is influenced by the ‘scripts’ provided by society.

Literary Significance:

Shakespeare’s use of this metaphor is a powerful commentary on human existence, contributing to the timeless appeal of his works. It invites readers and audiences to reflect on their own lives and the roles they play.

For a deeper exploration of this metaphor and its context in Shakespeare’s work, you can refer to the original play “As You Like It” and various literary analyses available in academic literature and online resources such as SparkNotes and Shakespeare Online.

Second, the more hidden metaphor:

What does “I don’t want to spend time on this” mean?

ChatGPT

“I don’t want to spend time on this” means that the person does not wish to allocate their time or effort to the particular task or subject being discussed. It implies a reluctance or unwillingness to engage with the matter at hand.

Does the sentence “I don’t want to spend time on this” contain a metaphor?

ChatGPT

No, the sentence “I don’t want to spend time on this” does not contain a metaphor. It is a straightforward expression of a preference or desire not to allocate time to a particular activity or task. There is no figurative language or metaphorical comparison in the sentence; it is a literal statement.

Doesn’t this sentence use the conceptual metaphor time is money?

ChatGPT

Yes, you are correct. The sentence “I don’t want to spend time on this” does employ the conceptual metaphor “time is money.” In this metaphor, time is treated as a valuable resource, similar to money, that can be spent, saved, or wasted. Using the verb “spend” in relation to time reflects this metaphorical understanding of time as a quantifiable and valuable commodity.