June 4, 2018, by Prof Meghan Gray

Why University League Tables are bad for you… and What’s Better

Guest post by Prof Michael Merrifield, Head of School

The School of Physics & Astronomy at the University of Nottingham has just done very well in the Guardian University League Tables 2019. For reasons that will become apparent, I am not going to say how well (if you really must know, go and look it up), but it is important that you understand that we were near the top, to make it clear that what I am about to write was not motivated by sour grapes because we did badly.

University league tables are a terrible way to choose where to apply for a university place. Why? Well, there are two main reasons, one to do with the way the tables are compiled, and one that is an intrinsic shortcoming of the very idea of a league table.

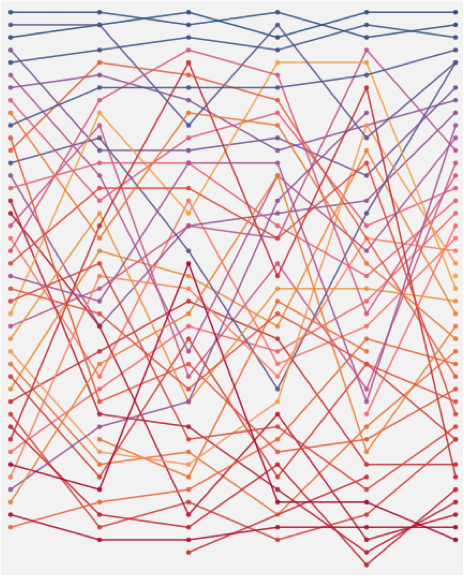

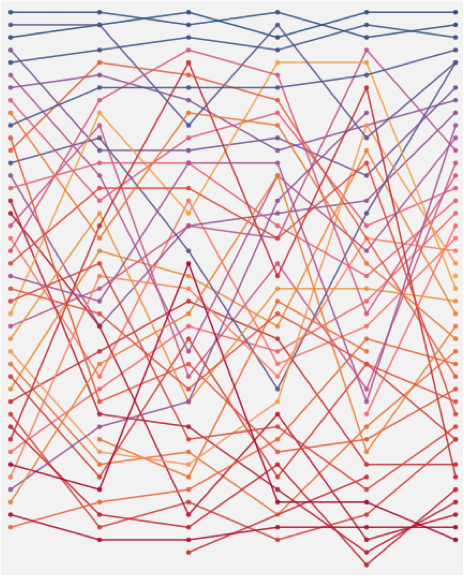

First, there is the way that rankings are made. Look at who compiles these tables. They are almost all put together by newspapers or other publications that need a story to go with the league table. “This year’s table much the same as last year’s” is not going to sell many papers as a headline. So, they have a vested interest in producing a table that changes significantly every year, and, unsurprisingly, that is exactly what they produce. The figure below, for example, shows the rankings of UK physics departments over a number of years in that Guardian table in which we did so well this year. Notice how much physics departments move around: one rocketed up from 35/42 to 5/44 in two years before plummeting back to 38/43 in last year’s table. Now ask yourself what the real timescale should be on which a physics department changes. Very properly, we are generally quite slow-moving organisations – we don’t arbitrarily change the syllabus every year, and are careful to introduce changes slowly, with quite a lengthy accreditation process at department, university and even national level through the Institute of Physics. Realistically, this imposes a timescale of maybe 5–10 years on which you would expect anything to change significantly. The volatility that can be seen on much shorter timescales is all down to the way that newspapers choose to construct their algorithms for ranking universities, because they have a commercial interest in doing exactly that. However, from your perspective, in terms of choosing where to study physics, it seems quite implausible that this year’s best choices would be so different from last year’s!

But the problem is actually much deeper than that. Even if one could find an altruistic organisation to produce an “honest” league table that reflects the actual timescales on which physics departments change, it still wouldn’t be a good basis for choosing which university to apply to. The fundamental problem is one of incommensurability. Just as we are taught in physics that it makes no sense to add a distance to a temperature, so it makes no sense to combine the basket of metrics that go into constructing these league tables into a single quantity. When combining them, there is fundamentally no objective way to decide how much weight to give to, say, overall student satisfaction in the National Student Survey versus staff-to-student ratio: is an institution where the students are all happy but taught in large classes better or worse than one where they are miserably individually tutored? This is clearly a matter of individual choice, but even at the individual level there is no objective right answer. As soon as someone produces a tidy ranked list of departments, they are making such an arbitrary, scientifically illiterate judgement for you.
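
To make the weighting problem concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the two departments, their scores and the weights are invented, and no real league table is claimed to use this formula. It simply shows that the same two numbers per department can yield opposite rankings depending on which weights the compiler happens to pick.

```python
# Hypothetical scores on a 0-100 scale; these are invented numbers,
# not real data for any department or any published league table.
departments = {
    "Dept A": {"satisfaction": 90, "staffing": 60},
    "Dept B": {"satisfaction": 70, "staffing": 85},
}


def rank(weights):
    """Return department names ordered by weighted score, best first."""
    def score(metrics):
        # Weighted sum of the metrics named in `weights`.
        return sum(weights[name] * metrics[name] for name in weights)

    return sorted(departments, key=lambda d: score(departments[d]), reverse=True)


# Two equally defensible (and equally arbitrary) weighting choices.
satisfaction_heavy = rank({"satisfaction": 0.7, "staffing": 0.3})
staffing_heavy = rank({"satisfaction": 0.3, "staffing": 0.7})

print(satisfaction_heavy)  # ['Dept A', 'Dept B']
print(staffing_heavy)      # ['Dept B', 'Dept A']
```

Neither ordering is wrong; each just encodes a different opinion about what matters, which is a choice only you can make for yourself.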

So, how can you make a reasonable judgement about where to apply? Well, firstly, although the combined data from which the league tables are constructed are, for the reasons mentioned above, largely nonsensical, the individual aspects can be useful. For example, if it is important to you to go to a university that helps students most to improve from their current level of attainment, then the “value-added” scores that these tables compile can be useful, as they quantify whether the institution manages to raise the attainment of weaker students to get good degrees.

Be warned, though – the quality of the data is not always what you might expect. A number of years ago, I noticed that Cambridge obtained the highest value-added score in one of the newspaper league tables. Since they are not famous for taking weaker students, this seemed very strange, so I contacted the compiler of the table to ask how this happened. His answer was enlightening: their proxy for prior attainment was the number of A-levels that incoming students had, and since Cambridge takes a disproportionate number of students who have taken other qualifications like the International Baccalaureate, these students were recorded as having no prior qualifications, and yet they generally did well in their degrees!

Finally, though, here’s the real secret. The best way to find a good place to study physics is… by asking other physics departments. Each will, of course, tell you that they are the best in the country (in the case of the University of Nottingham, it is even true!), but when you visit on a university open day or contact their admissions tutor by email, ask them where the other good places are. We work together a lot, share expertise, exchange external examiners to oversee each other’s degree programmes, recruit PhD students from other physics departments, and spend a lot of time nosing into each other’s programmes to see if there is anything good that we could “borrow” for our own provision, so we really do all have a pretty good overview of the landscape of physics in the UK. Of course, just giving you the names of a few other universities faces the same problem as the league tables in reducing the question to a single variable, but spend a little time engaging with us, and we should be able to give a much more nuanced and individual recommendation than any newspaper league table could ever manage.

An excellent critique of league tables, with extra information about their weaknesses that I was not aware of before. Thank you very much indeed for this very thoughtful and useful blog post.