December 3, 2013, by Teaching at Nottingham

Feedback as dialogue

Dr Richard Tunney: “The School of Psychology has made a number of changes to the feedback that students receive on their assessed work. This has been largely driven by feedback from students to us in Learning Community Fora (and their predecessors) and the National Student Survey. I suppose we could think about the quality of exam feedback from students’ perspective on a continuum. At one end the student might receive their grade via an anonymous computer system. This might be the average of the module and not the individual marks for each component. So a student might receive a D with no explanation as to why that mark was awarded or how they might improve their work. At the other extreme a student might sit with their examiner discussing the relative merits and failings of a written answer in a one-to-one meeting, perhaps over a copita of sherry.

“Until recently, students in Psychology received no comment on their examinations other than the actual mark. Nottingham wasn’t unique in this approach, which seemed to be the norm across many other universities. This streamlining of assessment may well have been an unintended consequence of the expansion in HE around the turn of the century. Students responded to this with increasingly vocal demands for more feedback on their work.

“However, change seemed to be a long time coming, in part due to an awareness of the perceived risks of examiners engaging in dialogue with their students. When we did move, we adopted a model in which examiners write a few sentences of feedback for each essay using specially printed carbon copy sheets. These are then handed out to students by administrative staff and exams officers in special sessions rather than one-to-one with the examiners.

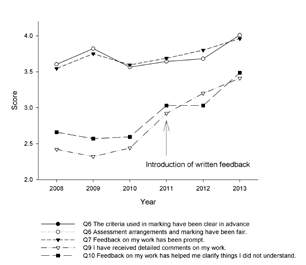

Figure 1: The NSS scores on the item relating to quality of feedback over the last 6 years. The arrow indicates the year in which exam feedback was introduced.

“This change has been reflected in improved NSS scores relating to feedback – see Fig. 1. One open comment from the most recent NSS stated that “Exam feedback reports are very helpful”, but despite the extra efforts we haven’t improved as much as we would like, and we still get comments like “Exam feedback was rushed and didn’t make much sense. Lecturers don’t give much advice on what they want from an essay.” So what are the problems? As the latter comment suggests, one of them is the increase in the time taken to mark the scripts and to write comments on them.

“This is particularly problematic for larger modules, which often have 250 or more students, each writing two essays, and only a small team of lecturers. Bear in mind, too, that each year the window for returning marks to the Examinations Office seems to get shorter, with the consequence that feedback might default to a restatement of the marking criteria (e.g. ‘shows/does not show evidence of wider reading’), or that it might be seen as a justification of the mark awarded rather than a recommendation for improvement.

“Perhaps the biggest problem is that feedback should be a dialogue between the student and their examiner, and that dialogue should be about congratulating students on good work and pointing out where improvements can be made. It’s not really very useful to a student to be given a comment about their work being pedestrian if they’re not told how to make it lively, interesting and informative. I often get the impression that examiners are reluctant to engage in feedback as dialogue out of a fear, misplaced or otherwise, that the dialogue becomes a negotiation or even a basis for appeal. This fear, along with the number of potential consultations, is one reason why we deliver our written feedback to students anonymously. Despite our intentions, we haven’t created the best of all possible systems for delivering feedback to students. It has created additional work for the examiners and can be impersonal. But it has bedded in and is a significant improvement on what we had before. An obvious question, however, is how we can measure the effectiveness of feedback. Two options spring to mind: an improvement in NSS scores relating to feedback (see Figure 1) and/or an improvement in students’ marks on essays. The latter is tricky, if not actually unethical, to test, so perhaps the proper place for feedback, and one that would circumvent many of the issues that I’ve raised, is a greater emphasis on formative assessment, in which feedback might actually improve a student’s summative grade.”

Dr Richard Tunney

School of Psychology
