August 7, 2017, by Stuart Moran

Investigating Automated Transcription and Translation

The Digital Research Team are working with researchers in the School of Education to explore the potential of automated transcription and translation services. Currently, researchers either use third-party transcription services or transcribe the data themselves. Third-party transcription can be expensive (~£60/hour), which means researchers cannot afford to transcribe all of their data. As a result, a significant amount of research data remains unused, underutilised or forgotten.

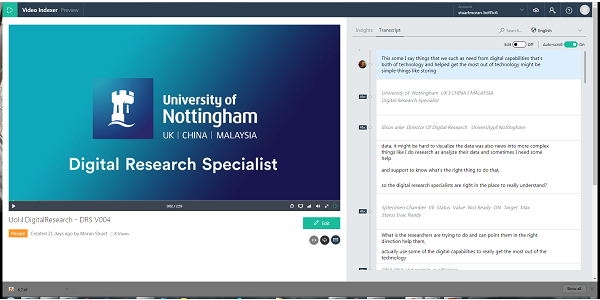

Microsoft are currently running a preview of their Video Indexer Portal, which is part of their wider Cognitive Services. The Video Indexer offers automated audio transcription, search across the extracted text, automated translation into several languages, speaker indexing and many other features. Users upload audio or video files to the portal, and it returns an analysis of the content, including a transcript (optionally translated). The transcript and analysis for a 1-hour audio file are returned within about 15 minutes of uploading, and the service is likely to cost between £8 and £15 per hour of audio in the future.
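To put those figures side by side, here is a rough arithmetic sketch in Python. It uses the ~£60/hour third-party rate and the projected £8 to £15 range quoted in this post, and assumes both are charged per hour of audio. The £600 budget and the function name are made up purely for illustration, and the automated prices are projections rather than confirmed pricing.

```python
# Rates quoted in this post (GBP per hour of audio). The automated
# figures are projected prices, not confirmed ones.
MANUAL_RATE = 60.0
AUTO_RATE_LOW = 8.0
AUTO_RATE_HIGH = 15.0


def hours_affordable(budget: float, rate_per_hour: float) -> float:
    """Return the hours of audio a budget covers at a given hourly rate."""
    if rate_per_hour <= 0:
        raise ValueError("rate_per_hour must be positive")
    return budget / rate_per_hour


if __name__ == "__main__":
    budget = 600.0  # hypothetical project budget, for illustration only
    manual = hours_affordable(budget, MANUAL_RATE)
    auto_min = hours_affordable(budget, AUTO_RATE_HIGH)
    auto_max = hours_affordable(budget, AUTO_RATE_LOW)
    print(f"Manual transcription: {manual:.0f} hours of audio")
    print(f"Automated transcription: {auto_min:.0f} to {auto_max:.0f} hours of audio")
```

With this made-up budget, the manual route covers 10 hours of audio, while the projected automated pricing would cover somewhere between 40 and 75 hours.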

The good news is that everyone with a University of Nottingham e-mail account can try out the indexer right now at https://www.videoindexer.ai/ (log in with your UoN corporate account), with a generous 40 hours of audio/video available for free. Please note: if you wish to trial the tool in its current state, you must review Microsoft’s terms of use. Audio/video data should only be uploaded to the service if all participants in the recording have given explicit consent. As such, I recommend you create a test audio file yourself, perhaps using “Sound Recorder” on your Windows machine.

In July 2017, the Digital Research Team ran a two-day workshop with researchers in the School of Education, where we discussed and experimented with the service in detail. While the researchers were very excited about its data and analytical capabilities, we discovered some limitations:

- The full text transcript could not be downloaded from the Video Indexer Portal

- The accuracy of the transcription was around 60%, depending on the quality of the audio

- The audio transcription of noisy recordings and group conversations was very poor
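This post does not say how the accuracy figure was measured. One common way to put a number on transcript quality is word accuracy, defined as 1 minus the word error rate (the word-level edit distance between a human reference transcript and the automated one, divided by the reference length). The following is a minimal sketch of that calculation under that assumption. The function name and the sample sentences are invented for illustration and are not from the workshop.

```python
def word_accuracy(reference: str, hypothesis: str) -> float:
    """Return 1 - word error rate, clamped at 0, comparing words case-insensitively."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words (Levenshtein, row by row).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    word_error_rate = prev[-1] / len(ref)
    return max(0.0, 1.0 - word_error_rate)


if __name__ == "__main__":
    human = "the cat sat on the mat"      # made-up reference transcript
    automated = "the cat sat on a mat"    # made-up automated transcript
    print(f"Word accuracy: {word_accuracy(human, automated):.2f}")
```

Transcribing a short sample by hand and comparing it this way gives a repeatable figure for judging whether a change of microphone or room actually helps.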

To allow us to continue exploring the potential of this technology, we plan to address these issues with some initial fixes. Firstly, we have purchased a range of high-quality audio devices to help improve audio quality at the point of recording. These devices include:

- Room Mics: Olympus ME-51S Stereo Microphone (multiple of these can be joined together for large rooms)

- Lapel Mics: Lavalier Lapel Omnidirectional (these work with mobile phones)

- 360 Degree Mics: Shure MV5-LTG Digital Condenser Microphone, Blue Microphones Yeti USB Microphone and a Blue Microphones Snowball Omnidirectional (great for podcasting too)

These high-quality devices, when paired with best practices such as careful microphone placement and suitable physical locations, will help improve the quality of the automated transcription. However, it will never be 100% accurate, and we expect the automated transcripts to be used as a simple search mechanism for identifying audio files and data of interest that might be worth transcribing in the more traditional way.
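As a rough illustration of using transcripts as a search mechanism, the sketch below counts whole-word keyword matches across a set of transcripts and ranks the recordings by number of hits. It assumes the transcripts are already available as plain text keyed by recording name. The function name, file names and sample text are all hypothetical, and nothing here uses the Video Indexer itself.

```python
import re


def search_transcripts(transcripts: dict[str, str], keyword: str) -> list[tuple[str, int]]:
    """Return (recording name, hit count) pairs, most hits first."""
    # Whole-word, case-insensitive match, so "assess" does not match "assessment".
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    hits = []
    for name, text in transcripts.items():
        count = len(pattern.findall(text))
        if count:
            hits.append((name, count))
    # Sort by descending hit count, then by name for a stable order.
    return sorted(hits, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Made-up transcripts standing in for automated output.
    sample = {
        "interview_01.mp3": "We talked about assessment, then assessment again.",
        "interview_02.mp3": "Mostly about timetabling.",
        "focus_group.mp3": "Peer assessment came up once.",
    }
    for name, count in search_transcripts(sample, "assessment"):
        print(f"{name}: {count} mention(s)")
```

Even an imperfect transcript is often good enough for this kind of triage, since a keyword only needs to be recognised once or twice for the recording to surface.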

In terms of the technical restrictions of the Video Indexer, we are currently working with Information Services and an external consultancy to develop a proof-of-concept solution. While it is still very early days for this technology, the current vision is a service in which researchers simply upload their audio/video files into a specified folder in their OneDrive, and a Word document transcript is automatically placed in another folder ~15 minutes later. It would then be possible to build a shared repository of transcripts, allowing researchers across the School / Faculty / University to search through and potentially reuse the research data, in addition to all the text analytics that could be done.

If you are interested in automated transcription or how digital technologies can be used to enhance your research, please get in touch with the Digital Research Team.

Previous Blog in Series: Transcription Services: A First Step Toward Safe Use

Stuart Moran, Digital Research Specialist for Social Sciences